A walkthrough showing how pipeline design and iterative experimentation improve document extraction accuracy on real documents.

Most document AI systems look accurate in demos but struggle when documents are bundled, inconsistent, or messy. This experimentation workflow is designed to surface those issues early, before models are deployed to production. What follows is a walkthrough of three real extraction experiments, illustrating how pipeline design, document structure, and iteration directly affect production accuracy.

Document extraction accuracy is almost never perfect on the first run. Reaching production-ready performance requires visibility into how schemas behave, where mismatches occur, and how changes affect results over time.

In production, accuracy breaks for predictable reasons: mixed document types, layout variation, edge cases, and schema drift. This workflow is designed to make those failure modes visible and measurable, rather than hiding them behind a single aggregate score.

This experimentation framework allows Invofox to:

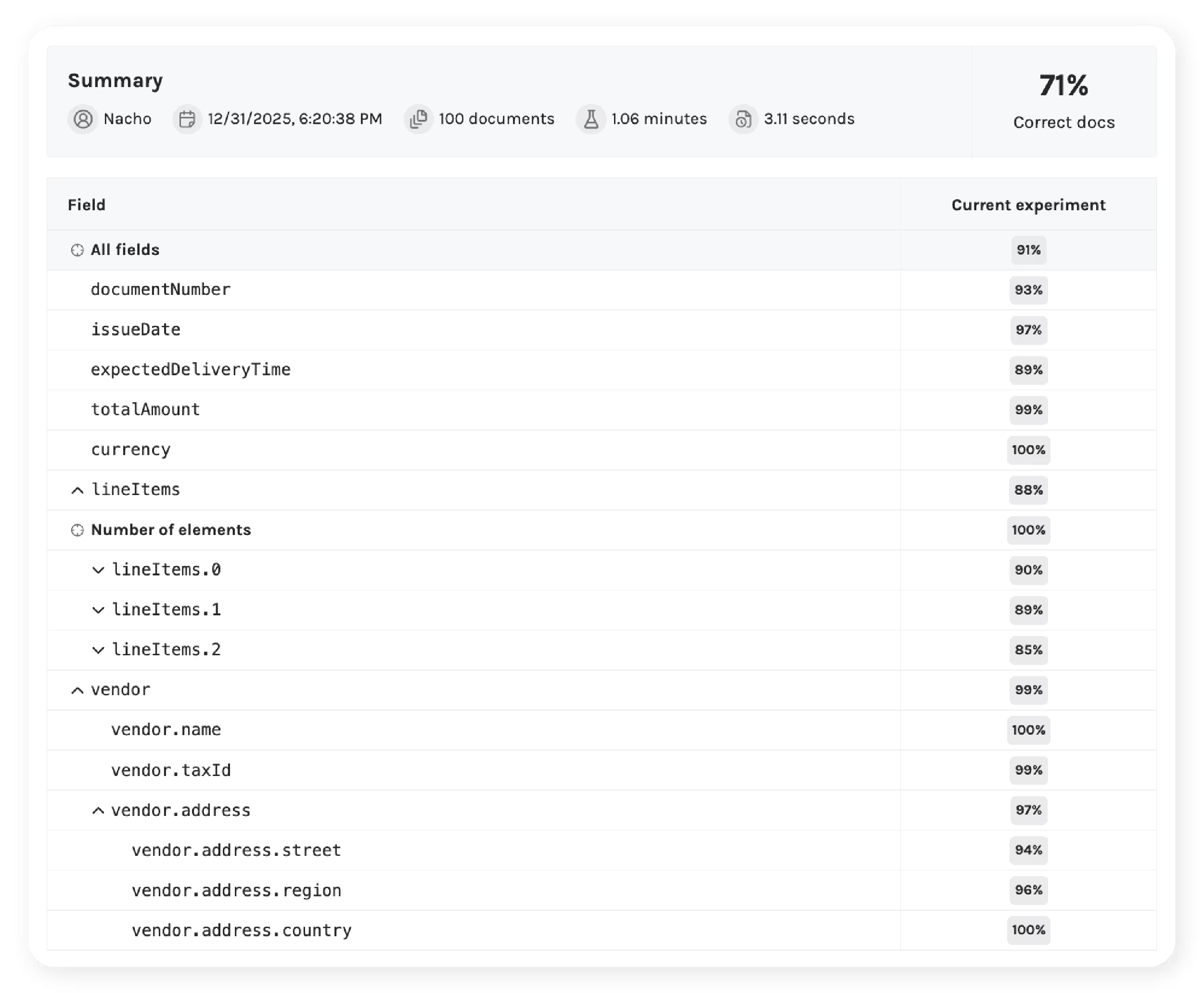

Measure accuracy at the field and document level.

Understand the root cause of errors instead of guessing.

Compare changes across experiments with concrete metrics.

Decide with confidence when a model is ready for production for a specific use case and document set, and when to update to new model releases as they become available.

The first experimentation cycle starts by running a simple extraction pipeline on client-provided documents and comparing the output against their ground truth.

At this stage, teams can observe:

Field-level accuracy across all extracted data

A first signal of which fields are stable and which ones degrade

A baseline to compare against future iterations
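The baseline comparison can be sketched in Python. This is a minimal illustration, not Invofox's implementation: it assumes extracted and ground-truth documents are plain dictionaries keyed by a document id, counts a field as correct only on an exact match after trimming whitespace and ignoring case, and counts a document as correct only when every one of its fields matches. The helper names (`normalize`, `accuracy_report`) and the sample invoices are hypothetical.

```python
from collections import defaultdict


def normalize(value):
    """Minimal normalization (an assumption): trim whitespace and ignore case."""
    if value is None:
        return None
    return str(value).strip().casefold()


def accuracy_report(predictions, ground_truth):
    """Compare extracted documents against ground truth.

    predictions / ground_truth: {doc_id: {field_name: value}}
    Returns (per-field accuracy, share of documents with every field correct).
    """
    field_hits = defaultdict(int)
    field_totals = defaultdict(int)
    perfect_docs = 0
    for doc_id, expected in ground_truth.items():
        extracted = predictions.get(doc_id, {})  # a missing document counts as all-wrong
        all_correct = True
        for field, expected_value in expected.items():
            field_totals[field] += 1
            if normalize(extracted.get(field)) == normalize(expected_value):
                field_hits[field] += 1
            else:
                all_correct = False
        perfect_docs += all_correct
    field_accuracy = {f: field_hits[f] / field_totals[f] for f in field_totals}
    document_accuracy = perfect_docs / len(ground_truth) if ground_truth else 0.0
    return field_accuracy, document_accuracy


truth = {
    "inv-1": {"invoice_number": "A-001", "total": "120.00"},
    "inv-2": {"invoice_number": "A-002", "total": "75.50"},
}
preds = {
    "inv-1": {"invoice_number": "A-001", "total": "120.00"},
    "inv-2": {"invoice_number": "A-0O2", "total": "75.50"},  # OCR confused 0 and O
}
fields, doc_acc = accuracy_report(preds, truth)
print(fields)   # {'invoice_number': 0.5, 'total': 1.0}
print(doc_acc)  # 0.5
```

Reporting both numbers matters: a single wrong character drops document-level accuracy to 50% even though three of four fields are correct.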

For each experiment, extracted values are compared directly against client-provided ground truth (the correct, expected values for each field). Mismatches are classified into explicit error categories to make failure modes visible and actionable, including:

OCR noise and character-level errors.

Semantically equivalent values expressed differently.

Incorrect field assignments or missing values.

Structural issues in nested fields or arrays.

This document-level view makes it possible to understand why a field failed, not just that it failed.
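A rule-based classifier shows how mismatches might be sorted into categories like the ones above. This is a hypothetical sketch: the category labels, the accepted date formats, the currency handling, and the 0.8 similarity threshold (computed with Python's `difflib.SequenceMatcher`) are illustrative assumptions, and a production system would likely need richer, field-aware rules.

```python
import difflib
from datetime import datetime

# Assumed date formats; real documents need locale-aware parsing (DD/MM vs MM/DD).
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y", "%B %d, %Y")


def canonical(value):
    """Reduce a scalar to a comparable form: a float, a date, or casefolded text."""
    text = str(value).strip()
    # Numbers: drop thousands separators and a leading currency symbol
    # (assumes '.' is the decimal separator).
    cleaned = text.replace(",", "").lstrip("$€£").strip()
    try:
        return float(cleaned)
    except ValueError:
        pass
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    return text.casefold()


def classify_field(extracted, expected, ocr_threshold=0.8):
    """Assign one extracted value to a hypothetical error category."""
    # Nested fields and arrays: a shape mismatch is a structural error.
    if isinstance(expected, (list, dict)):
        if type(extracted) is not type(expected) or len(extracted) != len(expected):
            return "structural"
        return "match" if extracted == expected else "incorrect_value"
    if extracted is None or str(extracted).strip() == "":
        return "missing_value"
    if str(extracted) == str(expected):
        return "match"
    # Same meaning, different surface form (e.g. "1,200.00" vs "1200").
    if canonical(extracted) == canonical(expected):
        return "semantic_equivalent"
    # Mostly identical characters suggest OCR noise rather than a wrong value.
    similarity = difflib.SequenceMatcher(None, str(extracted), str(expected)).ratio()
    if similarity >= ocr_threshold:
        return "ocr_noise"
    return "incorrect_value"


print(classify_field("1,200.00", "1200"))                # semantic_equivalent
print(classify_field("05/03/2024", "2024-03-05"))        # semantic_equivalent
print(classify_field("INV-2024-0O15", "INV-2024-0015"))  # ocr_noise
print(classify_field(None, "ACME Ltd"))                  # missing_value
print(classify_field("Globex Corp", "ACME Ltd"))         # incorrect_value
print(classify_field([{"sku": "A"}], [{"sku": "A"}, {"sku": "B"}]))  # structural
```

The order of checks is a design choice: cheap exact and semantic comparisons run first, so only genuinely different strings reach the similarity heuristic.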

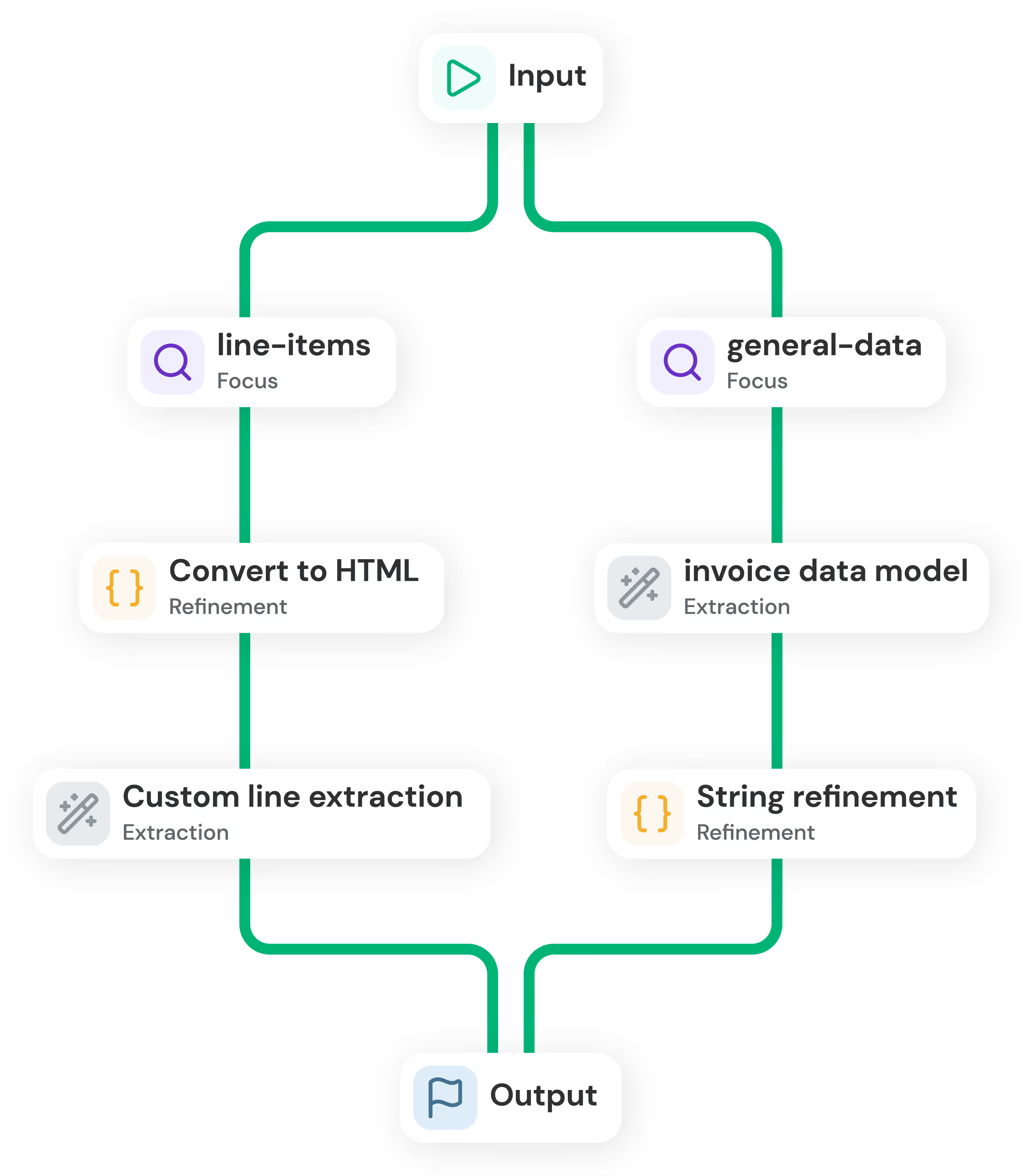

Based on this analysis, we apply targeted adjustments to the extraction pipeline, including the model, schema design, and post-processing logic. Common strategies include:

Focused extraction: splitting complex schemas so different models extract specific sections.

Input processing: converting inputs to HTML or Markdown to better align with model behavior.

Field-level refinement: applying normalization, post-processing, or custom logic to unstable fields.

Model specialization: running different models, fine-tuned per document type or use case, to improve accuracy.
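Focused extraction can be sketched as routing each section of a schema to its own extractor and merging the partial results. In this sketch the section layout and the two regex-based stubs (`header_stub`, `totals_stub`) are hypothetical placeholders standing in for section-specific models; only the routing and merge logic is the point.

```python
import re
from typing import Callable, Dict, List, Optional

# Hypothetical schema: each section lists the fields one extractor is responsible for.
Schema = Dict[str, List[str]]
Extractor = Callable[[str, List[str]], Dict[str, Optional[str]]]


def focused_extract(text: str, schema: Schema,
                    extractors: Dict[str, Extractor]) -> Dict[str, Optional[str]]:
    """Run one extractor per schema section and merge the partial results."""
    merged: Dict[str, Optional[str]] = {}
    for section, fields in schema.items():
        partial = extractors[section](text, fields)
        # Keep only the fields this section owns, so extractors cannot overwrite each other.
        merged.update({field: partial.get(field) for field in fields})
    return merged


# Stand-ins for section-specific models (regexes here purely for illustration).
def header_stub(text: str, fields: List[str]) -> Dict[str, Optional[str]]:
    match = re.search(r"Invoice\s+#?(\S+)", text)
    return {"invoice_number": match.group(1) if match else None}


def totals_stub(text: str, fields: List[str]) -> Dict[str, Optional[str]]:
    match = re.search(r"Total:\s*([\d.,]+)", text)
    return {"total": match.group(1) if match else None}


schema = {"header": ["invoice_number"], "totals": ["total"]}
doc = "Invoice #A-001\nWidget x2  60.00\nTotal: 120.00"
result = focused_extract(doc, schema, {"header": header_stub, "totals": totals_stub})
print(result)  # {'invoice_number': 'A-001', 'total': '120.00'}
```

Restricting each extractor's output to the fields its section owns keeps one model from silently overwriting another's values when sections overlap, and lets each section be swapped for a different model independently.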

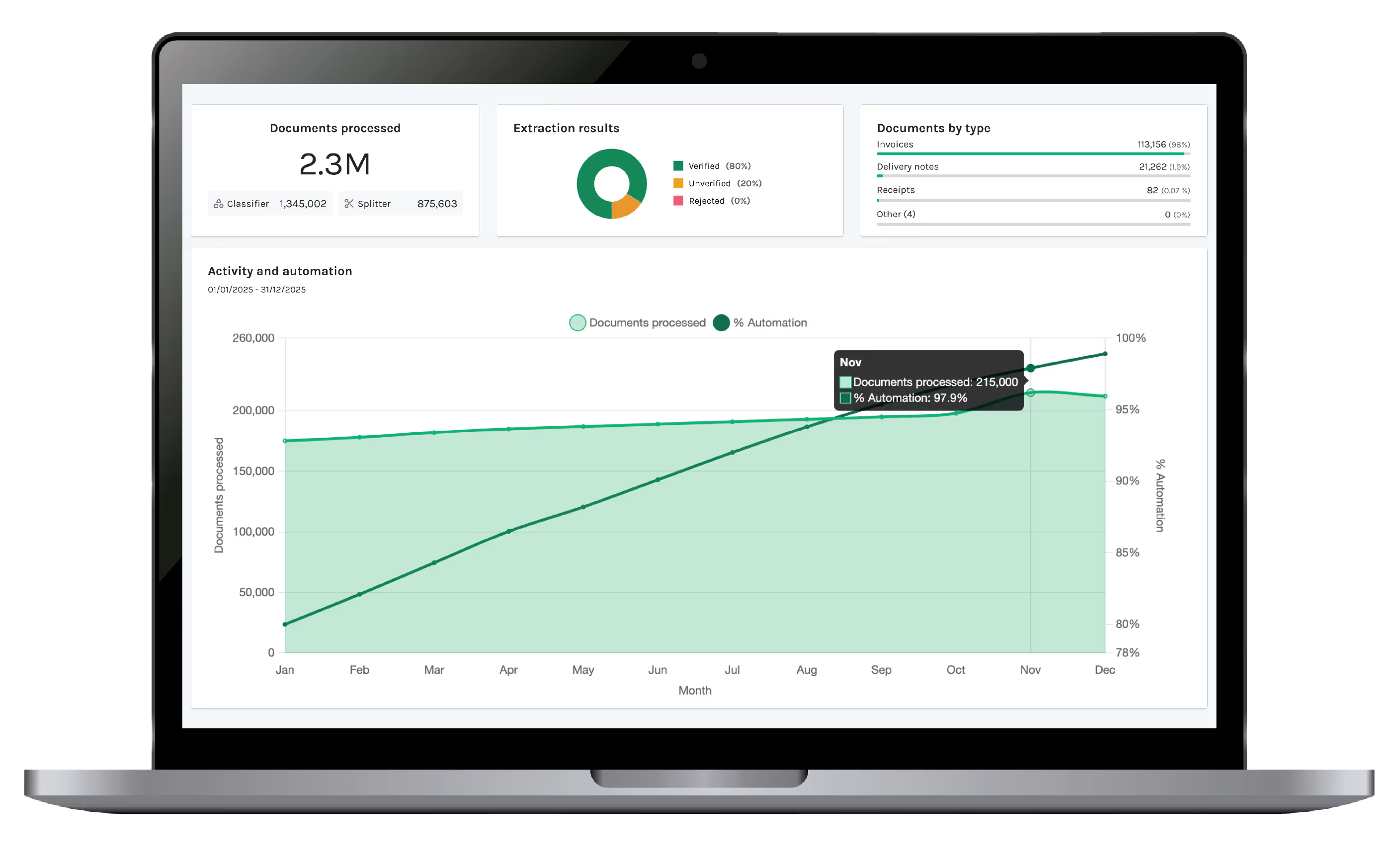

Before deploying to production, improvements are validated under production-like conditions to ensure they generalize beyond the initial dataset.

Test performance across unseen layouts, suppliers, and document variants.

Introduce new layouts and edge cases.

Apply production-scale document volumes to detect accuracy or performance degradation.
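Generalization checks can be sketched by holding out entire suppliers or layouts from development, then reporting accuracy per group rather than as one pooled number, since a pooled score can hide a single layout that fails badly. The record shapes (a `group` key per document, `(group, field, is_correct)` tuples) and the 0.9 threshold are assumptions for illustration.

```python
from collections import defaultdict


def group_holdout_split(docs, holdout_groups):
    """Keep whole suppliers/layouts out of development so the test set is truly unseen."""
    dev = [d for d in docs if d["group"] not in holdout_groups]
    test = [d for d in docs if d["group"] in holdout_groups]
    return dev, test


def accuracy_by_group(results, min_accuracy=0.9):
    """results: iterable of (group, field, is_correct). Returns per-group accuracy and weak groups."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, _field, is_correct in results:
        totals[group] += 1
        hits[group] += bool(is_correct)
    per_group = {g: hits[g] / totals[g] for g in totals}
    weak = sorted(g for g, acc in per_group.items() if acc < min_accuracy)
    return per_group, weak


docs = [{"id": 1, "group": "supplier_a"}, {"id": 2, "group": "supplier_b"},
        {"id": 3, "group": "layout_c"}]
dev, test = group_holdout_split(docs, holdout_groups={"layout_c"})
print([d["id"] for d in test])  # [3]

results = [
    ("supplier_a", "total", True), ("supplier_a", "due_date", True),
    ("supplier_b", "total", True), ("supplier_b", "due_date", False),
    ("layout_c", "total", False), ("layout_c", "due_date", True),
]
per_group, weak = accuracy_by_group(results)
print(per_group)  # {'supplier_a': 1.0, 'supplier_b': 0.5, 'layout_c': 0.5}
print(weak)       # ['layout_c', 'supplier_b']
```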

Once a pipeline is deployed to production, its accuracy is continuously improved using real-world data and client feedback.

Incorporate client corrections and feedback into new iterations.

Monitor accuracy trends and detect regressions over time.

Automatically adapt pipelines as documents, layouts, and requirements evolve.
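Regression monitoring from client feedback might look like the following sketch, which assumes that a client correcting a field means the extracted value was wrong. It only detects drops; adapting the pipeline is a separate step. The `FieldMonitor` class, the sliding-window size, the minimum sample count, and the tolerance are hypothetical parameters, not Invofox settings.

```python
from collections import deque


class FieldMonitor:
    """Track per-field accuracy from client corrections over a sliding window."""

    def __init__(self, baseline, window=200, tolerance=0.05):
        self.baseline = baseline      # field -> accuracy accepted at deployment time
        self.window = window          # number of recent outcomes kept per field
        self.tolerance = tolerance    # allowed drop before a field is flagged
        self.outcomes = {}            # field -> deque of bools (True = not corrected)

    def record(self, field, was_corrected):
        # Assumption: a client correction means the extracted value was wrong.
        if field not in self.outcomes:
            self.outcomes[field] = deque(maxlen=self.window)
        self.outcomes[field].append(not was_corrected)

    def regressions(self, min_samples=50):
        """Return fields whose recent accuracy fell below baseline minus tolerance."""
        flagged = {}
        for field, buffer in self.outcomes.items():
            if len(buffer) < min_samples or field not in self.baseline:
                continue
            current = sum(buffer) / len(buffer)
            if current < self.baseline[field] - self.tolerance:
                flagged[field] = round(current, 3)
        return flagged


monitor = FieldMonitor({"total": 0.98, "due_date": 0.95}, window=100)
for i in range(100):
    monitor.record("total", was_corrected=(i % 50 == 0))    # 2 corrections in 100
    monitor.record("due_date", was_corrected=(i % 5 == 0))  # 20 corrections in 100
print(monitor.regressions())  # {'due_date': 0.8}
```

The bounded `deque` keeps only recent outcomes, so a field that degrades after a layout change is flagged even if its long-run average still looks healthy.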

Most document AI systems are evaluated in isolation on clean inputs, limited datasets, and ideal conditions. But production accuracy breaks when documents are mixed, layouts vary, and schemas evolve over time.

This experimentation workflow exists to close that gap.

Rather than treating experimentation as an offline or one-time step, Invofox makes it an integral part of the document intelligence platform — connecting input handling, extraction pipelines, accuracy measurement, and iteration into a structured workflow.

This allows teams to:

Understand why accuracy changes

Detect regressions before they impact downstream systems

Validate improvements across real document variability, not cherry-picked samples

Confidently promote pipelines to production and safely adopt new model releases
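A promotion gate comparing a candidate experiment against the current baseline can be sketched as follows. It assumes both experiments report per-field accuracy on the same document set and applies one possible policy: block promotion if any field drops by more than a tolerance or disappears, even when the average improves. The function name and thresholds are illustrative.

```python
def promotion_decision(baseline, candidate, max_field_drop=0.01, min_mean_gain=0.0):
    """Decide whether a candidate experiment may replace the baseline.

    baseline / candidate: {field_name: accuracy} measured on the same document set.
    Returns (promote, regressions, missing_fields).
    """
    missing = sorted(set(baseline) - set(candidate))
    shared = [f for f in baseline if f in candidate]
    # Any shared field that drops by more than the tolerance counts as a regression.
    regressions = {
        f: (baseline[f], candidate[f])
        for f in shared
        if candidate[f] < baseline[f] - max_field_drop
    }
    mean_gain = sum(candidate[f] - baseline[f] for f in shared) / len(shared) if shared else 0.0
    promote = bool(shared) and not missing and not regressions and mean_gain >= min_mean_gain
    return promote, regressions, missing


baseline = {"invoice_number": 0.97, "total": 0.95, "due_date": 0.90}
candidate = {"invoice_number": 0.98, "total": 0.97, "due_date": 0.88}
print(promotion_decision(baseline, candidate))
# (False, {'due_date': (0.9, 0.88)}, [])

candidate_ok = {"invoice_number": 0.98, "total": 0.97, "due_date": 0.91}
print(promotion_decision(baseline, candidate_ok))
# (True, {}, [])
```

Checking each field separately is what lets the first candidate be rejected: its average accuracy is slightly higher than the baseline's, but `due_date` regresses.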

Automatically separates and classifies mixed, bundled documents so extraction pipelines start from clean, correctly structured inputs.

Measures and compares field-level and document-level accuracy across experiments to make performance changes explicit and repeatable.

Carries validated improvements forward as schemas, document sets, and models evolve, without reintroducing regressions.

Tracks accuracy trends, stability, and regressions over time to understand how pipelines behave beyond a single experiment.

Runs extraction and experimentation workflows without retaining documents or extracted data, for sensitive or regulated use cases.

The fastest way to understand real-world document intelligence workflows is to observe how structured experiments behave on your own documents—across iterations, document sets, and production-like conditions.