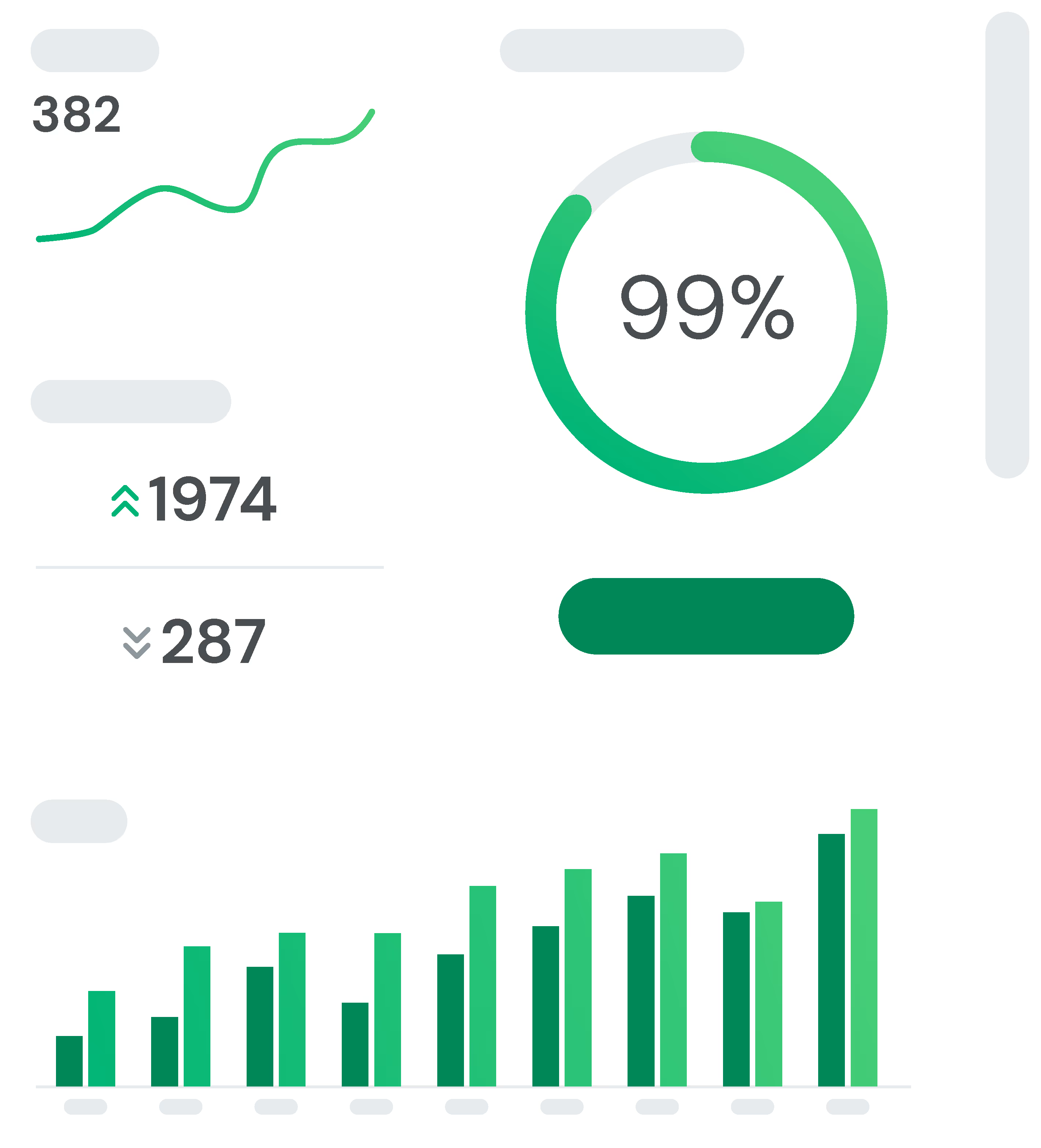

See how we evaluate, normalize, and validate data extraction accuracy across millions of documents using a transparent, ground-truth benchmarking process built for real-world evals.

Trusted by 100+ companies to validate document parsing and data extraction accuracy.

Document: Each individual unit processed in Invofox. In most cases, a document corresponds directly to the original uploaded file. When splitting is enabled, however, a single file can be divided into multiple documents that are processed independently.

Ground Truth: The set of documents reviewed and corrected by the client. It serves as the verified reference dataset for evaluating model performance and guiding iterative model improvements.

Benchmarking: The evaluation stage where model outputs are compared against the ground truth to calculate field-level and document-level accuracy, precision, and recall, along with detailed error metrics such as false positives and false negatives.
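To make these metrics concrete, here is a minimal sketch that scores one document's extracted fields against its ground truth. The function name `score_fields`, the dict-based field layout, and the convention that a wrong value counts as both a false positive and a false negative are illustrative assumptions, not a description of Invofox's internal implementation.

```python
from typing import Any, Dict, Optional


def score_fields(predicted: Dict[str, Optional[Any]],
                 truth: Dict[str, Optional[Any]]) -> Dict[str, Any]:
    """Compare extracted fields with ground truth for one document.

    Assumed convention (hypothetical): None or a missing key means
    "field absent". A wrong value counts as both a false positive
    and a false negative.
    """
    tp = fp = fn = 0
    for name in set(predicted) | set(truth):
        pred, gold = predicted.get(name), truth.get(name)
        if pred is None and gold is None:
            continue  # nothing expected, nothing extracted
        if pred is not None and gold is not None and pred == gold:
            tp += 1
        else:
            if pred is not None:
                fp += 1  # extracted a value that is wrong or not expected
            if gold is not None:
                fn += 1  # expected value was missed or extracted wrongly
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {
        "tp": tp, "fp": fp, "fn": fn,
        "precision": precision, "recall": recall,
        # Document-level accuracy: every field must be right.
        "document_correct": fp == 0 and fn == 0,
    }
```

Averaging `document_correct` over a dataset would give a document-level accuracy, while summing `tp`, `fp`, and `fn` per field name would give field-level precision and recall.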

Document data rarely looks identical, even when it’s correct. Our accuracy evaluation logic adapts to each data type to ensure comparisons remain fair and consistent:

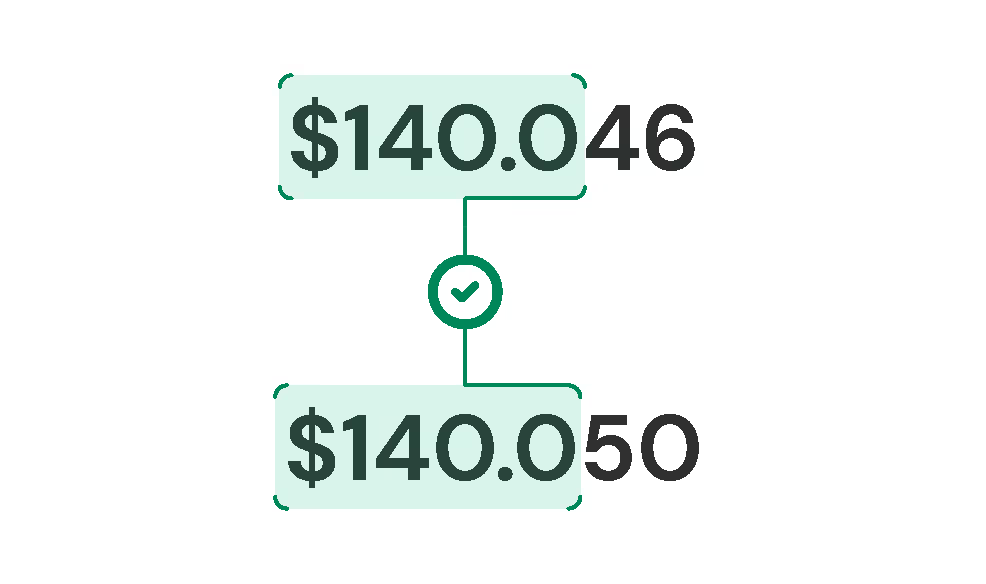

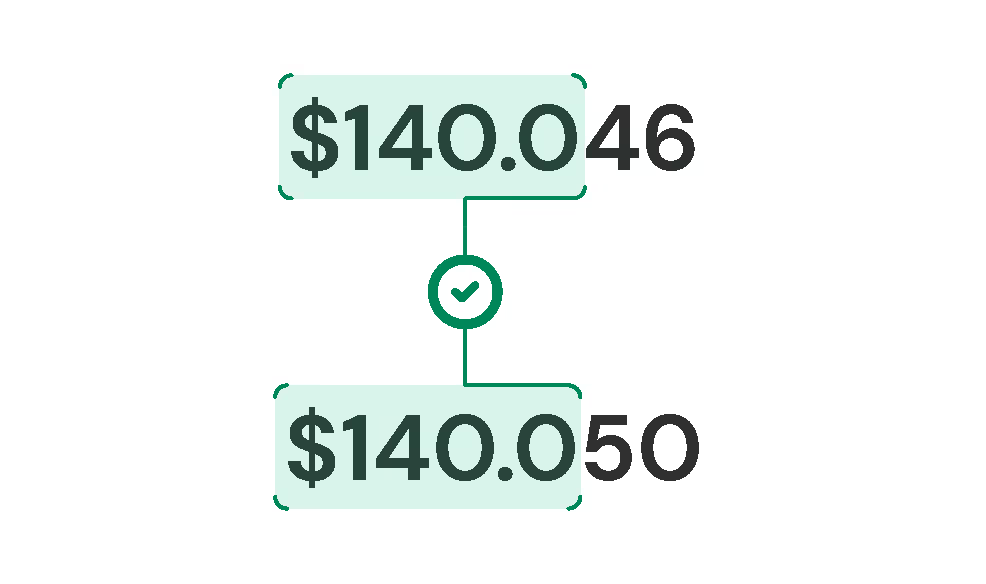

Numeric values are compared within tolerance ranges.
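A tolerance-based comparison for numeric values might look like the sketch below. The helper name `amounts_match` and the default tolerance of 0.01 are assumptions chosen for illustration. `Decimal` is used so that currency-style values compare without binary floating-point rounding.

```python
from decimal import Decimal


def amounts_match(predicted: str, truth: str,
                  tolerance: Decimal = Decimal("0.01")) -> bool:
    """Return True when two amounts differ by no more than `tolerance`.

    The default tolerance of 0.01 is an illustrative assumption.
    """
    return abs(Decimal(predicted) - Decimal(truth)) <= tolerance
```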

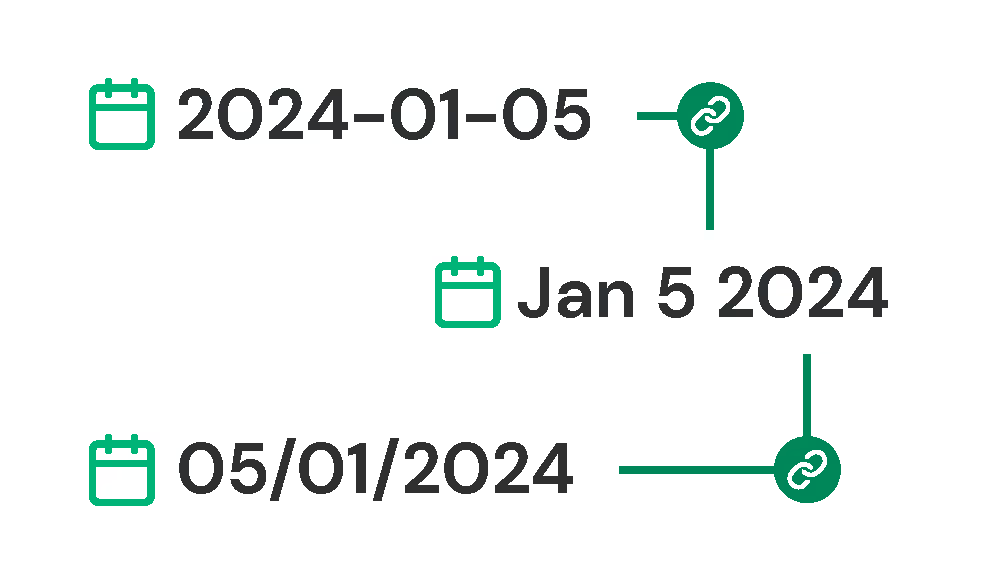

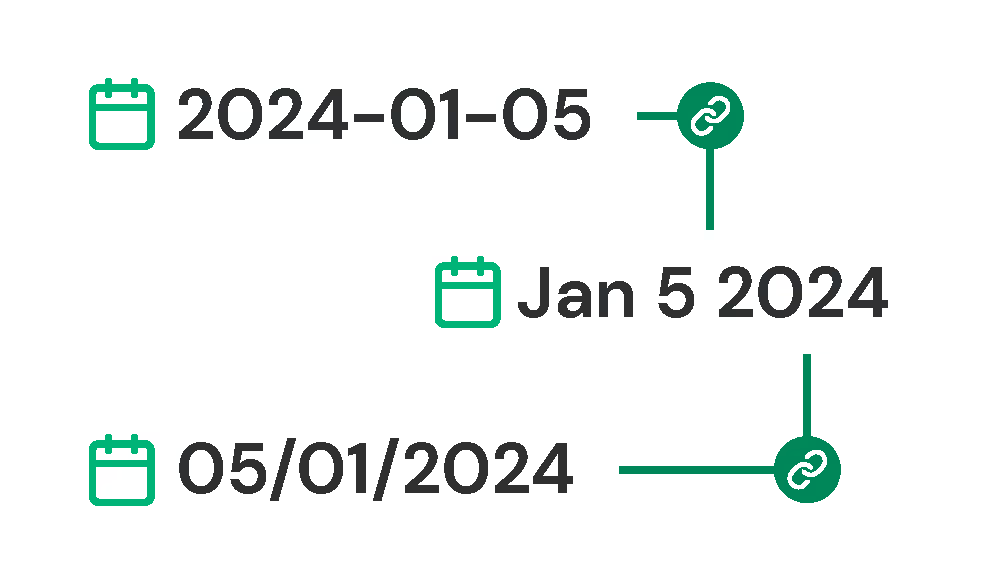

Dates and timestamps are standardized to avoid time-zone mismatches.
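One way to standardize timestamps is to convert both values to UTC before comparing, as sketched here. The helper names are hypothetical. Treating timestamps without an offset as UTC is an assumption that a real pipeline would need to confirm per document source.

```python
from datetime import datetime, timezone


def to_utc(value: str) -> datetime:
    """Parse an ISO 8601 timestamp and convert it to UTC.

    Assumption: timestamps without an offset are treated as UTC.
    """
    parsed = datetime.fromisoformat(value)
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed.astimezone(timezone.utc)


def timestamps_match(predicted: str, truth: str) -> bool:
    """Compare two timestamps after normalizing both to UTC."""
    return to_utc(predicted) == to_utc(truth)
```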

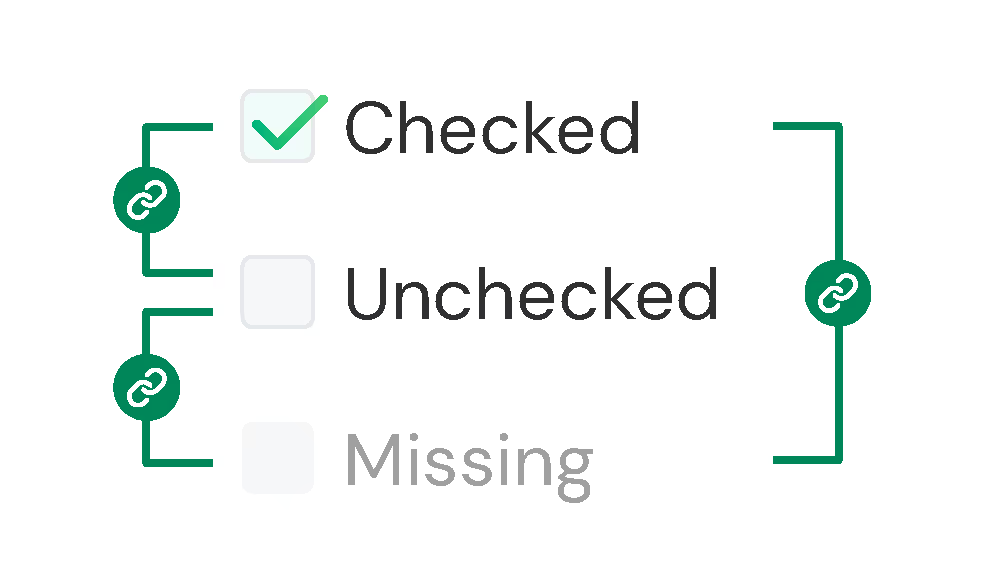

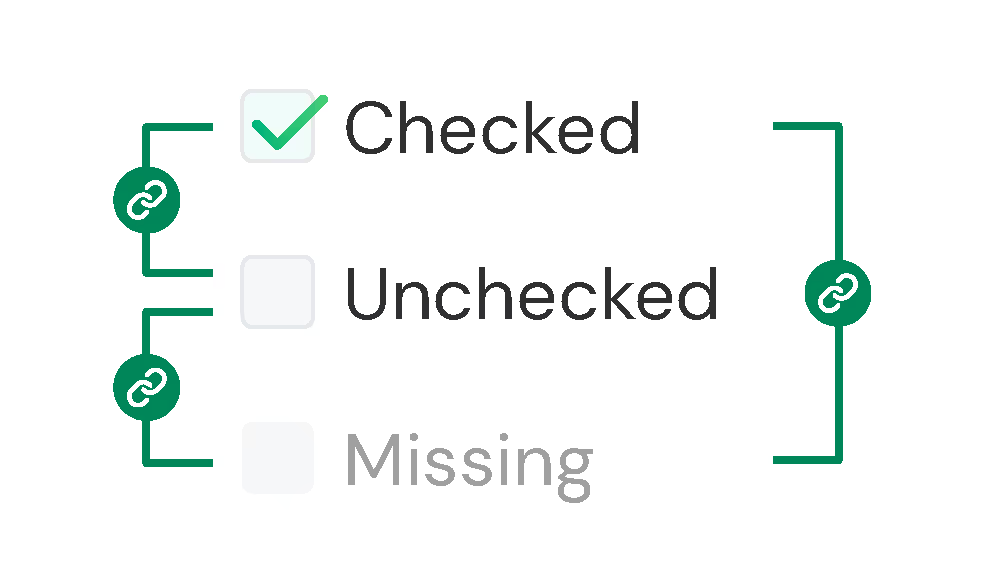

Checkbox and boolean fields account for missing or unchecked states.
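For checkbox-style fields, the comparison has to decide whether "missing" and "unchecked" mean the same thing. The sketch below exposes that choice as a flag. Both the function name and the `missing_equals_unchecked` switch are hypothetical and only illustrate the idea.

```python
from typing import Optional


def checkbox_match(predicted: Optional[bool], truth: Optional[bool],
                   missing_equals_unchecked: bool = True) -> bool:
    """Compare checkbox values where None means the field is missing.

    `missing_equals_unchecked` is a hypothetical policy switch: when True,
    a missing checkbox is treated the same as an unchecked one.
    """
    if missing_equals_unchecked:
        predicted = bool(predicted)  # None -> False
        truth = bool(truth)
    return predicted == truth
```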

Lists are evaluated by content, not order (unless order is business-critical).
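Comparing lists by content rather than order can be sketched with a multiset comparison. The function name and the `order_matters` flag are assumptions for illustration. `Counter` keeps duplicate items significant, so two copies of a line item do not match one.

```python
from collections import Counter
from typing import Hashable, Sequence


def lists_match(predicted: Sequence[Hashable], truth: Sequence[Hashable],
                order_matters: bool = False) -> bool:
    """Compare two lists, ignoring order unless `order_matters` is set."""
    if order_matters:
        return list(predicted) == list(truth)
    # Multiset comparison: same items with the same counts, in any order.
    return Counter(predicted) == Counter(truth)
```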

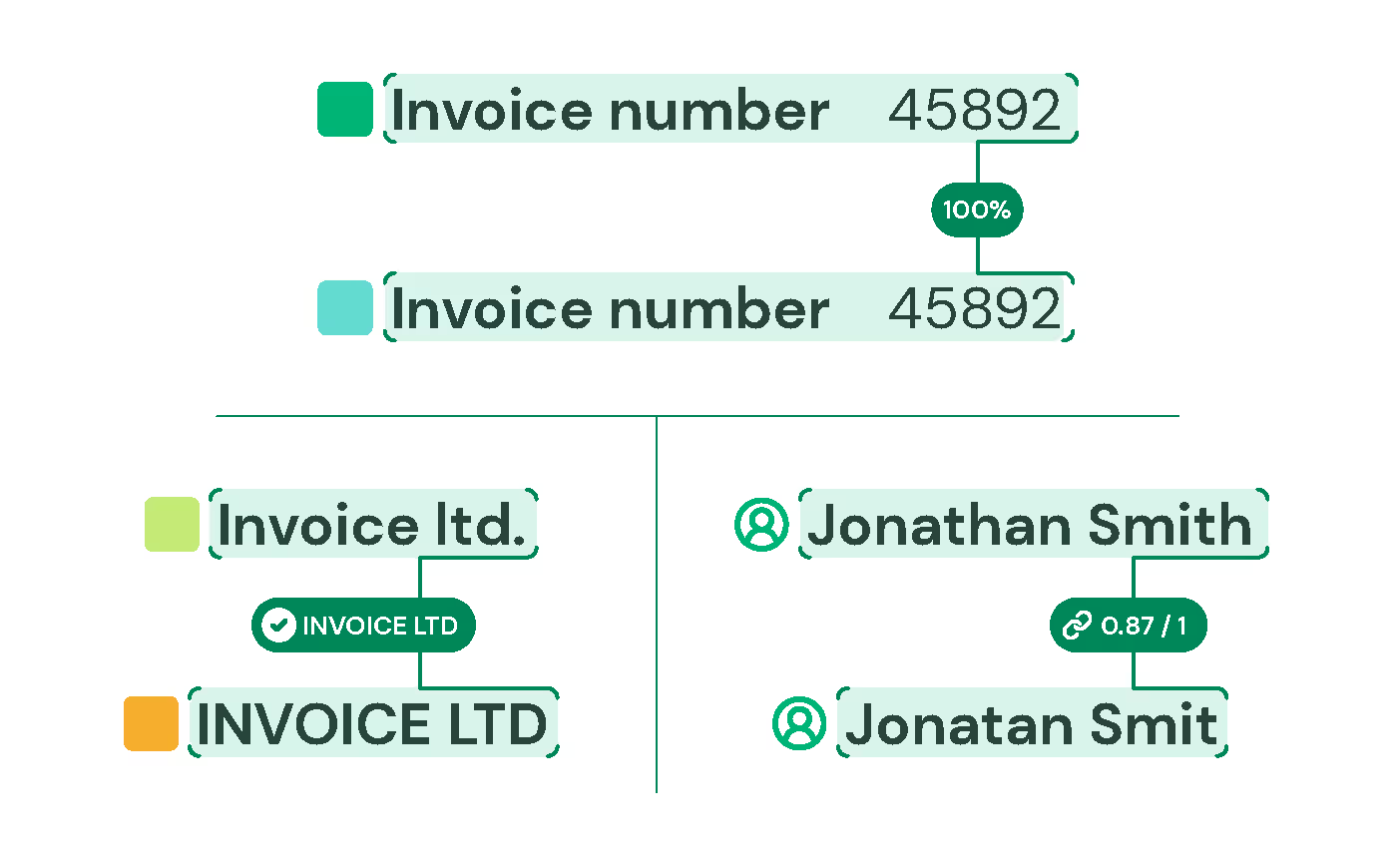

Text fields are compared at three levels:

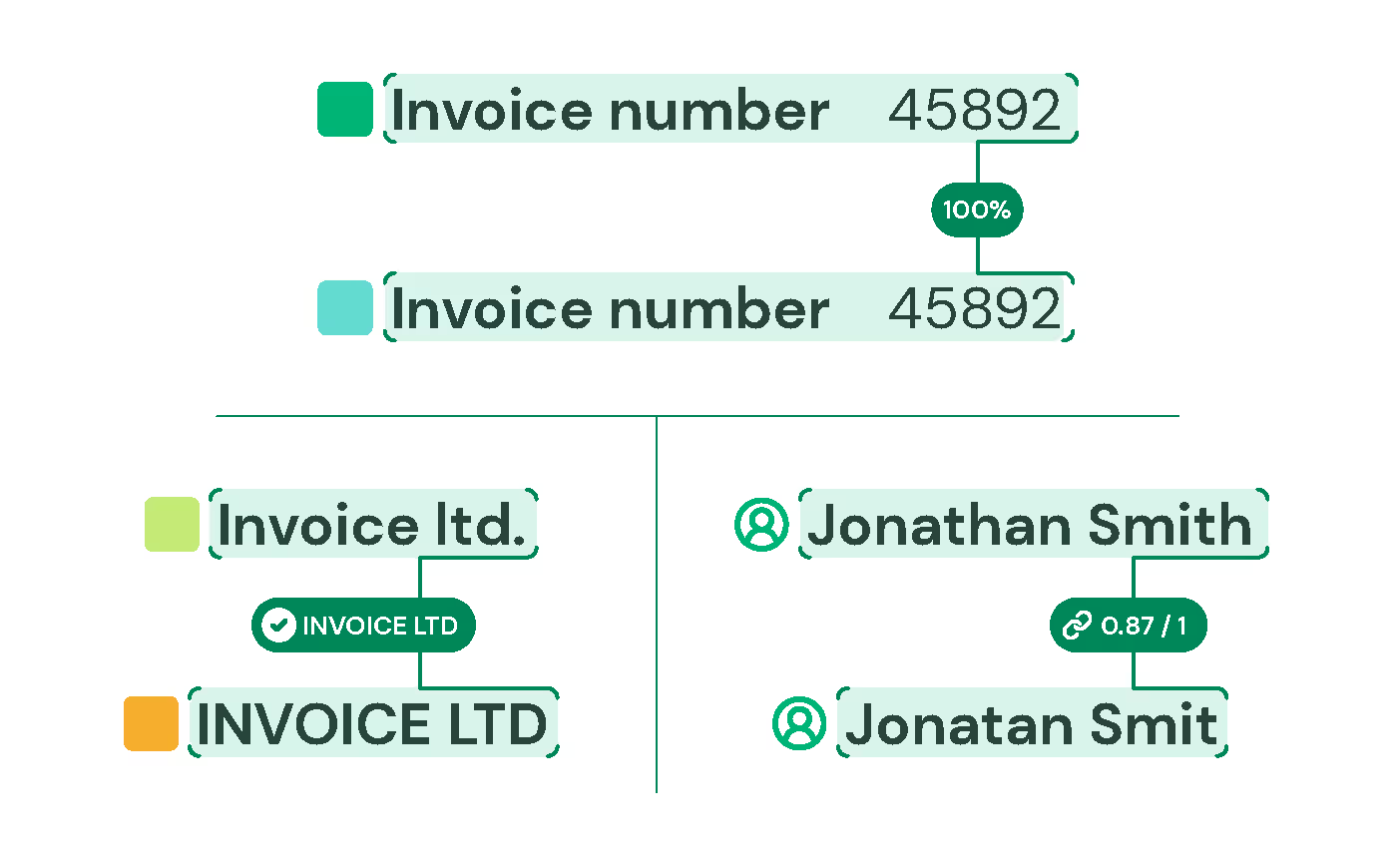

Exact Match: For critical fields like totals, IDs, or contract numbers, values must be 100% identical.

Normalized Match: Formatting differences (case, spaces, punctuation) are cleaned before comparison to avoid false mismatches.

Similarity Match (Levenshtein Distance): For flexible fields like names or addresses, we score how similar two strings are on a scale from 0 to 1, based on the number of single-character edits needed to turn one into the other.
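The three levels can be sketched as a cascade that tries the strictest comparison first. Everything named here is an assumption for illustration: the helper names, the normalization rules (lowercase, with whitespace and ASCII punctuation removed), the similarity formula `1 - distance / longest_length`, and the 0.85 threshold.

```python
import string


def normalize(text: str) -> str:
    """Lowercase and drop whitespace and ASCII punctuation."""
    drop = set(string.punctuation) | set(string.whitespace)
    return "".join(ch for ch in text.lower() if ch not in drop)


def levenshtein(a: str, b: str) -> int:
    """Edit distance via the standard two-row dynamic program."""
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,               # deletion
                current[j - 1] + 1,            # insertion
                previous[j - 1] + (ca != cb),  # substitution
            ))
        previous = current
    return previous[-1]


def similarity(a: str, b: str) -> float:
    """Map edit distance to a 0-1 score (1.0 means identical)."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - levenshtein(a, b) / longest


def match_level(predicted: str, truth: str, threshold: float = 0.85) -> str:
    """Return the strictest level at which the two strings agree."""
    if predicted == truth:
        return "exact"
    if normalize(predicted) == normalize(truth):
        return "normalized"
    if similarity(normalize(predicted), normalize(truth)) >= threshold:
        return "similar"
    return "mismatch"
```

In practice, each field would be assigned the level it must reach: a contract number would require `"exact"`, while a customer name might accept `"similar"`.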

Benchmark your current IDP or in-house system against Invofox — we’ll show you the data side by side.

Invofox tracks schema versions automatically, aligning field definitions across updates so results remain comparable. You’ll always know whether changes come from real performance gains or schema adjustments.

Yes. Every customer receives the full report plus all raw outputs. You can verify or replicate our results anytime for complete transparency.

Both systems process the same documents under identical rules and thresholds. Invofox then shares all results side by side for objective comparison.

Our process is designed for any high-volume, accuracy-critical workflow — including invoices, bank statements, mortgage packages, insurance forms, and more.